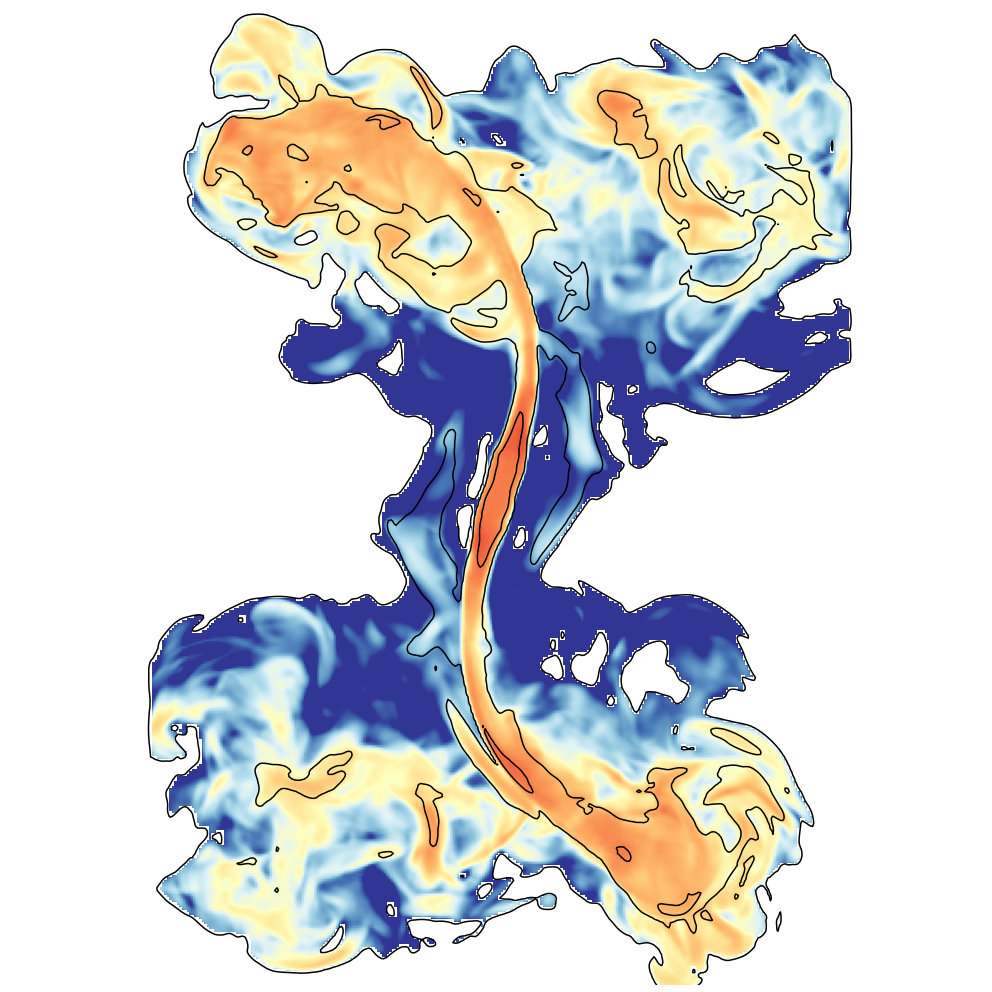

A new paper utilized the Wombat code to study the evolution of radio galaxy jets that are precessing. By utilizing the cosmic ray physics in Wombat, synthetic radio images are shown which can be directly compared to radio observations to better understand the dynamics of these types of sources.

Modeling emissions from an AGJ jet -- galaxy collision

A recent study using the Wombat code has modeled the interaction of a radio jet from an active galaxy that appears to be impacting on and triggering star formation in a dwarf galaxy. We look at the radio properties of this source and compare to synthetic observations from simulations to constrain the properties of this system.

New Heights in Weak Scaling

Weak scaling describes the ability of an implementation to solve large problems on “equally” large computers. In practice, one simulates a typical test problem on a part of a large machine and then doubles its size (read: resolution) and the number of compute nodes. Ideally, the execution time should remain the same independent of the size of the problem. WOMBAT was designed around this problem and makes the test incredibly easy. The problem is defined on a per MPI rank/node basis in the parameter file, so one really only has to increase the size of the machine, the problem automatically increases by the same amount.

Weak scaling really measures the growth of non-parallelizable work in a program on large machines. Generally speaking, this can be branching, load imbalance and communication overhead - with the latter two being the big hit. The general strategy is to distribute work evenly to keep load imbalance low and to asynchronously overlap communication and work to hide data transfer latency. In WOMBAT, we are using a thread-level asynchronous communication strategy to get close to hardware communication latencies, i.e. the interconnect (read here about Cray’s brand new Shasta network). More importantly, we are exclusively choosing algorithms that allow node localized asynchronous communication. For example, we cannot use Fast Fourier Transforms (FFTs) to solve elliptic PDEs like the Poisson equation, because it requires a synchronized communication step that does not scale.

It seems our efforts have not been in vain.

Recently, the OpenMPI collaboration used WOMBAT-public to demonstrate weak scaling to 512.000 KNL CPUs on the Trinity machine at Los Alamos National Laboratory:

Weak scaling of Wombat on Trinity: Runtime over compute size for different MPI libraries (MPT=Cray, OMPI=OpenMPI) and different number for OpenMP thread per node (Source: OpenMPI Collaboration).

The figure above shows the weak scaling test for WOMBATs TVD solver, but we’d expect it to be the same for the WENO5 solver. For 2 MPI Ranks per node = 32 threads, the runtime remains constant between 4000 and 512.000 cores ! This was likely one of the largest Eulerian grid simulations run to date, even if the runtime was only one minute ;-)

Weak scaling tests with longer runtimes than a few minutes for 100 steps hide communication inefficiencies in computational work and thus are pretty meaningless - the idea is to expose the communication overhead. Step times should be in the regime of a few seconds and the runtime should fluctuate by ~10% due to hardware limitations and other users on the system. You can see in the figure that obviously something goes wrong for MPT in some runs and the Runtime increases needlessly. Something to look into for Nick, Krishna and the Cray MPT team in Minnesota, I suppose.

The result shows that WOMBAT is really designed to be an exa-scale implementation in terms of communication and latency. The code is already used to test MPI libraries on largest scales, which is very good for code portability as well. What we require to make the final step to 10^18 Flops is to port our fluid solvers to accelerators using OpenMP 5. Of course we knew that beforehand, so the WENO5 implementation is already written with this in mind. Computation and memory operations are explicitly exposed, so a first port can probably be achieved in a week or two.

All we need are the computers, but at least in the US there is a clear roadmap available:

US Department of Energy roadmap towards the Exascale (Source).

The figure above is already a bit outdated. Today we know that NERSC-9 will be called Perlmutter (context), a Cray system with AMD CPUs, NVIDIA GPUs and an insane 30 PByte, 4TB/sec of SSD storage ! We recently applied for early access for our first cosmological simulations through the NESAP call, also to tap into that storage system with Julialang in post-processing.

The Argonne Exascale system will be called Aurora and is build by Cray and Intel. One can only speculate what type of accelerator will drive that system, but it’ll will likely not run NVIDIA’s CUDA. Our choice to go with OpenMP 5 for accelerators seems to be a save bet for portability, even if it costs a bit of performance. At the 10-20% level, the convenience in maintaining the code will likely pay off as well. In academia we are mostly limited by man-power, rarely by compute power.

Cori is our target system for a first unigrid 5th order cosmological simulation end of 2019, while Perlmutter is required for a zoomed cluster simulation in 2020/21. For the latter runs WENO5 on accelerators is a necessity. We will attempt to reach a significant fraction of peak on these systems in cosmological simulations. With the current weak scaling results (5% on Hazel Hen) this seems well feasible - wouldn’t you agree ?